Simultaneous Localization and Mapping

If you’ve read anything about indoor mapping, UAVs, autonomous cars, or any other technology that relies on a good understanding of the world around it, you will likely have come across the term “SLAM”. It stands for Simultaneous Localization And Mapping, and it is a collection of algorithms that automate the process of determining where you are and how you’re moving relative to the objects around you. It’s one of the many things our own brains do without our being consciously aware of it, and it’s becoming ubiquitous in vehicle automation and mapping applications.

Tracking the Movement of Objects

When watching a 2D movie, we get a sense of motion from observing a change in perspective of the different objects on the screen. A rotating building gives us the sense of flying around it. Trees whipping past indicate speeding forward. A tilting horizon gives a feeling of rolling and pitching. These sensations can be so strong that they cause motion sickness, even in the comfort of our living room.

SLAM does all this. By searching sequential image frames for a common object, it can track the motion of the object relative to the observer (or vice versa). By tracking multiple objects assumed to be stationary, the SLAM algorithm can start to build up a solution for the observer’s motion. To a photogrammetrist, this concept is the same as a bundle adjustment solving for photo centers and exterior orientation. By tracking every object in the image and recording the position of each, SLAM starts to build up a map of the environment even as it solves for the observer’s motion through that environment.
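To make the idea concrete, here is a minimal sketch of the motion-solving step, reduced to two dimensions. It assumes the feature matching has already been done, so that the same stationary objects are available as 2D coordinates in the observer's frame at two consecutive instants. The function names `estimate_rigid_motion_2d` and `observer_motion` are hypothetical, and the closed-form least-squares fit used here stands in for the far more involved image-based estimation a real SLAM system performs.

```python
import math

def estimate_rigid_motion_2d(prev_pts, curr_pts):
    """Least-squares rotation angle and translation such that
    curr ~= R(theta) * prev + t for matched 2D points."""
    n = len(prev_pts)
    if n < 2 or n != len(curr_pts):
        raise ValueError("need at least two matched point pairs")

    # Centroids of both point sets.
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    ccx = sum(p[0] for p in curr_pts) / n
    ccy = sum(p[1] for p in curr_pts) / n

    # Accumulate dot and cross products of the centered points;
    # their ratio gives the best-fit rotation angle.
    s_dot = 0.0
    s_cross = 0.0
    for (px, py), (cx, cy) in zip(prev_pts, curr_pts):
        ax, ay = px - pcx, py - pcy
        bx, by = cx - ccx, cy - ccy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)

    # Translation that maps the rotated previous centroid onto the current one.
    c, s = math.cos(theta), math.sin(theta)
    tx = ccx - (c * pcx - s * pcy)
    ty = ccy - (s * pcx + c * pcy)
    return theta, (tx, ty)

def observer_motion(prev_pts, curr_pts):
    """Assuming the tracked objects are stationary, the observer's motion
    is the inverse of the apparent motion of the objects."""
    theta, (tx, ty) = estimate_rigid_motion_2d(prev_pts, curr_pts)
    c, s = math.cos(theta), math.sin(theta)
    # Observer position expressed in the previous frame: -R^T * t.
    position = (-(c * tx + s * ty), s * tx - c * ty)
    heading_change = -theta
    return heading_change, position

# Three stationary objects seen before and after the observer
# turns by 0.1 rad and moves by (1.0, 0.5).
landmarks_before = [(2.0, 1.0), (5.0, -1.0), (3.0, 4.0)]
turn, move = 0.1, (1.0, 0.5)
cos_t, sin_t = math.cos(-turn), math.sin(-turn)
landmarks_after = [
    (cos_t * (x - move[0]) - sin_t * (y - move[1]),
     sin_t * (x - move[0]) + cos_t * (y - move[1]))
    for x, y in landmarks_before
]
heading_change, position = observer_motion(landmarks_before, landmarks_after)
```

With noise-free input, the recovered heading change and position match the simulated turn and move. With real, noisy observations the same fit gives a least-squares estimate, which is one reason tracking more objects helps.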

Precise Indoor Positioning

Like any relative positioning system, and like any bundle adjustment with poor geometry, SLAM is subject to drift. Drift is the accumulation of small errors at each step, which add up to large errors over time. As in a traditional surveying traverse, drift can be managed by returning to known points, a process called “loop closure”. This assumes that the drift is predictable and that the objects being used are properly identified as known points.
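The traverse analogy can be sketched in a few lines. The example below simulates a walk around a square with a small heading bias on every step, then closes the loop by spreading the misclosure along the path in proportion to distance travelled, in the spirit of the compass rule used for survey traverses. The function names and the 0.01 rad bias are illustrative assumptions. Production SLAM systems typically solve loop closure with a more general optimization over all poses, so treat this as the simplest possible stand-in.

```python
import math

def integrate_steps(steps, heading_bias=0.0):
    """Accumulate (distance, turn) steps into a path of (x, y) positions.
    heading_bias is a small error added to every turn to simulate drift."""
    x = y = 0.0
    heading = 0.0
    path = [(x, y)]
    for distance, turn in steps:
        heading += turn + heading_bias
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
        path.append((x, y))
    return path

def close_loop(path):
    """Assume the last point should coincide with the first, and distribute
    the misclosure in proportion to the distance travelled so far."""
    cumulative = [0.0]
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        cumulative.append(cumulative[-1] + math.hypot(x1 - x0, y1 - y0))
    total = cumulative[-1]
    if total == 0.0:
        return list(path)
    err_x = path[-1][0] - path[0][0]
    err_y = path[-1][1] - path[0][1]
    return [
        (x - err_x * d / total, y - err_y * d / total)
        for (x, y), d in zip(path, cumulative)
    ]

# A 10 m square: four legs with a 90 degree left turn before legs 2 to 4.
square = [(10.0, 0.0)] + [(10.0, math.pi / 2)] * 3

drifted = integrate_steps(square, heading_bias=0.01)
misclosure = math.hypot(drifted[-1][0] - drifted[0][0],
                        drifted[-1][1] - drifted[0][1])
adjusted = close_loop(drifted)
```

Here `misclosure` is the gap between where the walk ended and where it should have ended. After `close_loop`, the end point coincides with the start and every intermediate point has been nudged by a share of the error. The correction is only as good as the assumption that the error grew steadily along the path.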

Another source of error in SLAM comes from a lack of features. A blank wall or tunnel with no distinguishing features provides no information that could be used to update a user’s position. This is usually worked around with dead-reckoning or other assumptions about the observer’s motion.
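A minimal sketch of that fallback, assuming a constant-velocity motion model: when a feature-based position fix is available it is used directly, and when it is not (represented here by `None`) the last known velocity is projected forward. The `track` function and its inputs are hypothetical and not drawn from any particular SLAM library.

```python
def track(start, velocity, dt, fixes):
    """Follow a sequence of position fixes, dead-reckoning through gaps.

    fixes: one entry per time step, either an (x, y) position derived
    from observed features or None when no features were visible.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    x, y = start
    vx, vy = velocity
    path = []
    for fix in fixes:
        if fix is None:
            # No features in view: assume the observer kept moving
            # at the last known velocity.
            x += vx * dt
            y += vy * dt
        else:
            # Features available: refresh the velocity estimate from the
            # displacement since the previous estimate, then accept the fix.
            vx, vy = (fix[0] - x) / dt, (fix[1] - y) / dt
            x, y = fix
        path.append((x, y))
    return path

# Two steps past a featureless wall, bracketed by feature-based fixes.
path = track(start=(0.0, 0.0), velocity=(1.0, 0.0), dt=1.0,
             fixes=[(1.0, 0.0), None, None, (4.0, 0.5)])
```

The two dead-reckoned positions lie on a straight line, while the final fix shows the observer had actually drifted sideways. That gap is the error accumulated while no features were available.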

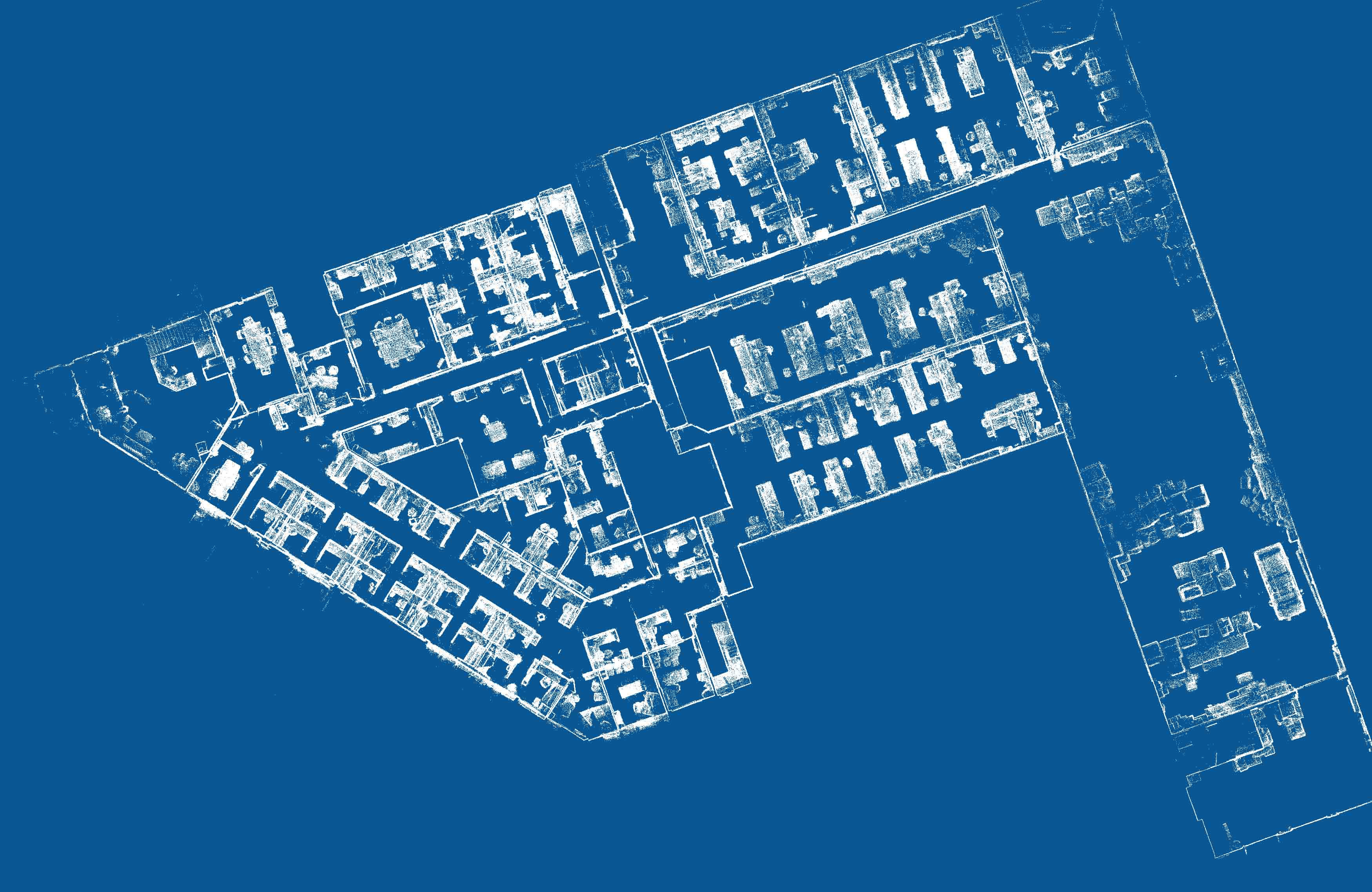

TIMMS

While TIMMS isn’t strictly a real-time application, it does use this technology to provide a very high level of accuracy from a moving platform. At the core of TIMMS is an inertial navigation system that can provide relative position without any dependence on observable features. The ability to input survey control also constrains or eliminates other error sources. In TIMMS, SLAM is used as a refinement step, ensuring that no matter how many times an operator walks down the same hall or past the same desk, the final result includes only one surface for the wall and only one location for the desk. This allows TIMMS to overcome the challenges of surveying from a moving platform and achieve a level of accuracy similar to that of static scanning.